Issue:

We have an application (our primary one) that the licensing structure is based on active device connections. Problem, we have more devices than licenses. This isn't a big deal since due to scheduling not all devices are connecting at once.

But, when user 1 on device 1 goes home and forgets to close the app then user 2 on device 2 has an issue (provided the other licenses are already taken). Closing out User 1 is fairly easy (reboot the computer), but not when there are 10+ workstations and they don't know which ones using the license. It suddenly because a whack-a-mole game that they didn't want to play.

Idea:

The license usage information is stored in a SQL table. So if we can make this information accessible they can see exactly where licenses are used.

Note: there are other ways to handle this, but they each had their own cons (ie auto logout of users on timer, etc). For now, simply reporting on it was the best option to make the whack-a-mole game quicker.

With PowerApps we could access the SQL table directly and tell the users! But wait, MS charges (IMO) a large amount of money for these types of connections. Can we do it without a premium connection? Yes, not as great, but it will do :)

Solution:

The idea is to take this in multiple parts to end up with an end product that we put on the main SharePoint page so they can quickly see what workstations are up for whacking.

- Export the data from SQL

- Upload it to a SharePoint List

- Display it in a pretty format

1. Export from SQL

First up we need to get the data out of SQL into a format we can use. Also, we want to do this easily, I'm not interested in messing around all day with figuring out why I can't get the export to Excel to work or other variations. PowerShell has a SQL Module and there's Export-CSV, so that's easy.

We'll need the SQL PowerShell module: Download SQL Server PowerShell Module - SQL Server | Microsoft Docs. In my case I already had it as I added it when I built the server and installed SQL.

Devart.com had a blog on this with a number of options and well written. Option 3 is what we are looking for How To Export SQL Server Data From Table To a CSV File (devart.com)

Invoke-Sqlcmd -query 'Select WSID, ConnDate FROM mydb.myschema.connections;' -ServerInstance S-SQLDEV | Export-Csv -Path D:\Reports\connections.csv -NoTypeInformation

Great, now I have a PowerShell script that I can schedule with Task Scheduler to run every so often, let's say 10 minutes, and dump a csv file for me. It's pretty lightweight so I'm not worried about the hit my SQL server will take from it.

Task Scheduler:

Under Program/Script enter Powershell.exe

For the "Add arguments" enter -ExecutionPolicy Bypass "D:\Scripts\MyScript.ps1" (of course using your path and script name)

And the account that runs the Scheduled task needs read permissions to the SQL Database in question (or you will get blank results).

2. Upload to SharePoint List

Okay, now we need to get our lovely csv to a SharePoint list. This is fairly easy, but there are some steps involved. In particular, we need PowerShell v7 and PnP PowerShell Module (at least I went this route). Also, I did this part on a different server as I didn't want to monkey around with uploading files to SharePoint on my SQL server. Instead, I used another PS script to move the file from the SQL server to my file server and then uploaded from there (optional step below).

Optional: as just stated, I didn't want this part on my SQL Server so I copied the file to a File Server first. I did this by added the below to my PS script on the SQL Server. (the service account used to run the scheduled task will need permissions at the remote location)

Copy-Item -Path "Microsoft.PowerShell.Core\FileSystem::D:\Reports\connections.csv" -Destination "Microsoft.PowerShell.Core\FileSystem::\\FileServerName\D$\Scripts\Connections\connections.csv"

I then proceeded with the rest of the steps on the File Server.

Installing PowerShell v7 is pretty easy... Installing PowerShell on Windows - PowerShell | Microsoft Docs

Optional: PSv7 doesn't include the ISE anymore.

Now they encourage you to use Visual Studio Code with the PowerShell Extension. Download Visual Studio Code - Mac, Linux, Windows

So I proceeded to download VSCode on my workstation so I can build the PSv7 script and run it on the File Server. (installed PSv7 on my workstation and the file server)

Once installed either install the PowerShell extension during the setup process or go to Settings (bottom left), Extensions and find / install.

SharePoint PnP PowerShell Module:

I decided to use the PS PnP Modules as I felt that it greatly reduced complexity of the scripts.

Here's a great writeup of SharePoint PnP by June with connections instructions: How to Use SharePoint PNP PowerShell Module in Office 365 (adamtheautomator.com)

Note: you'll want to run Install-Module "PnP.PowerShell" when logged in as the account that task scheduler will be set to run as or the script will fail.

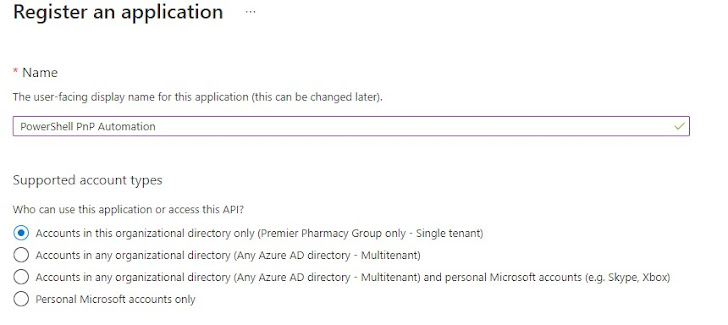

I had issues using his directions for non-interactive connections. So, I used the following instead which I created a separate post for: Did You Restart?: PowerShell PnP connection using Azure AD App Registration and Certificates (didyourestartyet.com)

Import CSV to SharePoint List:

Now we can import our csv to a SharePoint List to use as our "free database". Salaudeen nails it with his post on SharePoint Diary. SharePoint Online: Import CSV File into SharePoint List using PowerShell - SharePoint Diary

SharePoint List:

First let's setup a List on our SharePoint site. I'm not going to detail these steps as you should be fairly familiar with this already. I will point out however that SharePoint forces creation of column "Title" with the required flag on. Rather than renaming this column I just went into the list settings, selected the column, and turned off the required flag. Then I leave the Title column blank and ignore it.

I also created the necessary columns in the SharePoint list to match the columns in the CSV. So in my case WSID and ConnDate.

PowerShell:

I used the second option from the SharePoint Diary with PnP and some slight modifications.

- We need to initiate the non-interactive connection to SharePoint online

- I wanted to replace the list each time, not add or update.

Script is copied from the SharePoint Diary site linked above. I only added the connection and the line to get/delete all list contents.

#Parameters

$SiteUrl = "https://contoso.sharepoint.com/sites/mysite"

$ListName = "Connections"

$CSVPath = "C:\Scripts\Connections\Connections.csv"

#Connect to SharePoint Online non-interactive

Connect-PnPOnline $SiteUrl -ClientId 'yourclientIDfromPowerShellStep' -Tenant 'contoso.onmicrosoft.com' -Thumbprint 'CertificateThumbprintfromPowerShellStep'

#Get the CSV file contents

$CSVData = Import-CsV -Path $CSVPath

#Get all contents of the list and delete it! No add or update

Get-PnPListItem -List $ListName | Remove-PnPListItem -Force

#Iterate through each Row in the CSV and import data to SharePoint Online List

ForEach ($Row in $CSVData)

{

Write-Host "Adding Contact $($Row.WSID)"

#Add List Items - Map with Internal Names of the Fields!

Add-PnPListItem -List $ListName -Values @{"WSID" = $($Row.WSID);

"ConnDate" = $($Row.ConnDate);

};

}

#Read more: https://www.sharepointdiary.com/2015/09/import-csv-file-to-sharepoint-list-using-powershell.html#ixzz7etuTjTh8

Awesome script. Great job Salaudeen.

Now all I had to do was save the script and make a scheduled task that runs as the user that I setup the Powershell PnP automation certificate under. Note: must also install-module "PnP.PowerShell" under that account.

Now, the csv is uploading to the List file and overwriting all contents at the schedule I set (really, we're deleting all content and then uploading the csv...)

Since the data is now in a SharePoint List we can access it with PowerApps / PowerAutomate without paying for the premium connector. No, it's not real-time, but that hardly matters for some data.